Canny Edge Detection

This summer I will be implementing the Canny Edge Detector in python.

Edge Detection? What? Why?

What - Edge detection in image processing is a tool which detects areas in images with sudden change in brightness.

Why - Very useful in computer vision all types of imaging tasks. Used to reduce the amount of data in an image and preserve only the important ones for further processing.

What is expected from the algorithm?

It must be optimal fulfilling to these criteria:

1. Good Detection - Probability to detect 'real' edges should be maximized and probability to detect 'unreal' edges must be minimized. (MAXIMUM Signal To Noise RATIO)

2. Good Localization - Detection edges should be as close as possible to real edges.

3. Edges count -One real edge should correspond to only one detection edge.

Implementation

My implementation will be in python using the Scipy module less and mathematics more. This is because I want to learn about it. I will be updating this section this summer frequently.

Test Image

Imports:

import scipy.ndimage as ndi import scipy import numpy import Image import math

Edit: Done till sobel edge detection.

STEP: NOISE REDUCTION 1. The first step is to change the image to b/w which is already done in our image.

2. Then we store the image in a numpy array.

3. Apply a gaussian filter to the image to make it smooth. This is because this will remove grains from the image with sigma=1.4.

sigma = 1.4

f = 'lena_std.jpg.jpg'

img = Image.open(f).convert('L') #grayscale

imgdata = numpy.array(img, dtype = float)

G = ndi.filters.gaussian_filter(imgdata, sigma)

STEP: Search the intensity gradient of the image

4. The edges of an image may point to different directions. So, we use the Sobel Operator to detect horizontal, vertical and diagonal edges.

What? Sobel? Whats that?

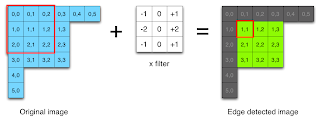

In simple terms, it calculates the gradient of the image intensity at each point. Edges in an image are actually places of sudden jump in intensity. It gives the largest change in light to dark and rate of change in that direction. It searches for maximum and minimum in first derivative of the image. It is used to find the absolute gradient of the image at each point in a b/w image. It uses 2 convolution masks for this. One is for X-direction and the other is for Y-direction. They are also known as kernels. If A is the image and Gx and Gy are two images which at each point contain the horizontal and vertical derivative approximations, the computations are as follows:

So, the magnitude is :

And, the direction is:

Edge[1][1] = (A[0][0]*-1)+(A[0][1]*0)+(A[0][2]*1)+(A[1][0]*-2)+(A[1][1]*0)+(A[1][2]*2)+ (A[2][0]*-1)(A[2][1]*0) +(A[2][2]*1)

Note: The convolution occurs just by sliding the kernels on each pixel. Thus the first one to be convolved is A[1][1]. The rows and columns at the corners of the image are not convolved. This is because the center of the kernel cannot reach them. The image below describes this:

Now, the code for this.

sobelout = Image.new('L', img.size) #empty image

gradx = numpy.array(sobelout, dtype = float)

grady = numpy.array(sobelout, dtype = float)

sobel_x = [[-1,0,1],

[-2,0,2],

[-1,0,1]]

sobel_y = [[-1,-2,-1],

[0,0,0],

[1,2,1]]

width = img.size[1]

height = img.size[0]

for x in range(1, width-1):

for y in range(1, height-1):

px = (sobel_x[0][0] * G[x-1][y-1]) + (sobel_x[0][1] * G[x][y-1]) + \

(sobel_x[0][2] * G[x+1][y-1]) + (sobel_x[1][0] * G[x-1][y]) + \

(sobel_x[1][1] * G[x][y]) + (sobel_x[1][2] * G[x+1][y]) + \

(sobel_x[2][0] * G[x-1][y+1]) + (sobel_x[2][1] * G[x][y+1]) + \

(sobel_x[2][2] * G[x+1][y+1])

py = (sobel_y[0][0] * G[x-1][y-1]) + (sobel_y[0][1] * G[x][y-1]) + \

(sobel_y[0][2] * G[x+1][y-1]) + (sobel_y[1][0] * G[x-1][y]) + \

(sobel_y[1][1] * G[x][y]) + (sobel_y[1][2] * G[x+1][y]) + \

(sobel_y[2][0] * G[x-1][y+1]) + (sobel_y[2][1] * G[x][y+1]) + \

(sobel_y[2][2] * G[x+1][y+1])

gradx[x][y] = px

grady[x][y] = py

sobeloutmag = scipy.hypot(gradx, grady)

sobeloutdir = scipy.arctan2(grady, gradx)

for x in range(width):

for y in range(height):

if (sobeloutdir[x][y]<22.5 and sobeloutdir[x][y]>=0) or \

(sobeloutdir[x][y]>=157.5 and sobeloutdir[x][y]<202.5) or \

(sobeloutdir[x][y]>=337.5 and sobeloutdir[x][y]<=360):

sobeloutdir[x][y]=0

elif (sobeloutdir[x][y]>=22.5 and sobeloutdir[x][y]<67.5) or \

(sobeloutdir[x][y]>=202.5 and sobeloutdir[x][y]<247.5):

sobeloutdir[x][y]=45

elif (sobeloutdir[x][y]>=67.5 and sobeloutdir[x][y]<112.5)or \

(sobeloutdir[x][y]>=247.5 and sobeloutdir[x][y]<292.5):

sobeloutdir[x][y]=90

else:

sobeloutdir[x][y]=135

STEP: Non Maximum SuppressionFor each image pixel in the direction matrix, if corresponding pixel of the magnitude image is less than its diagonals, vertical or horizontal pixels we make that pixel 0. Code below is easy to understand.

for x in range(1, width-1):

for y in range(1, height-1):

if sobeloutdir[x][y]==0:

if (sobeloutmag[x][y]<=sobeloutmag[x][y+1]) or \

(sobeloutmag[x][y]<=sobeloutmag[x][y-1]):

mag_sup[x][y]=0

elif sobeloutdir[x][y]==45:

if (sobeloutmag[x][y]<=sobeloutmag[x-1][y+1]) or \

(sobeloutmag[x][y]<=sobeloutmag[x+1][y-1]):

mag_sup[x][y]=0

elif sobeloutdir[x][y]==90:

if (sobeloutmag[x][y]<=sobeloutmag[x+1][y]) or \

(sobeloutmag[x][y]<=sobeloutmag[x-1][y]):

mag_sup[x][y]=0

else:

if (sobeloutmag[x][y]<=sobeloutmag[x+1][y+1]) or \

(sobeloutmag[x][y]<=sobeloutmag[x-1][y-1]):

mag_sup[x][y]=0

Choose two thresholds, one low(tl) and another high(th). This depends on the image. Create two blank images(gnh and gnl). Gnlhwill store pixels of non maximum suppression which are >= th and the other with store pixels which are >=tl. Thus gnl will contain all features of gnh. Now, to normalize the edges we do gnl = gnl-gnh. After this step, we follow these steps as given by canny:

a. Locate the next unvisited edge pixel p, in gnh.

b. Mark as valid edge pixels all the weak pixels in gnl that are connected to p by 8 connectivity.

c. If all non zero pixels in gnh have been visited, STOP.

Gnh will give the final image.

The code for this is:

m = numpy.max(mag_sup)

th = 0.2*m

tl = 0.1*m

gnh = numpy.zeros((width, height))

gnl = numpy.zeros((width, height))

for x in range(width):

for y in range(height):

if mag_sup[x][y]>=th:

gnh[x][y]=mag_sup[x][y]

if mag_sup[x][y]>=tl:

gnl[x][y]=mag_sup[x][y]

gnl = gnl-gnh

def traverse(i, j):

x = [-1, 0, 1, -1, 1, -1, 0, 1]

y = [-1, -1, -1, 0, 0, 1, 1, 1]

for k in range(8):

if gnh[i+x[k]][j+y[k]]==0 and gnl[i+x[k]][j+y[k]]!=0:

gnh[i+x[k]][j+y[k]]=1

traverse(i+x[k], j+y[k])

for i in range(1, width-1):

for j in range(1, height-1):

if gnh[i][j]:

gnh[i][j]=1

traverse(i, j)

The output I get after this is:Hope you liked it. Please comment. I need your views to improve. Thank You.

Code: github repo

ImageSet: Imgur

well done bro...carry on.. :)

ReplyDeletethanx. :)

DeleteHey, what you've done here is awesome! Definitely the most helpful breakdown of the Canny Edge Detection method I have seen so far. You might be able to help me with some advice actually.

ReplyDelete*I know this is a crazy explanation but hopefully you'll bare with me*

I'm designing a system for a class where I intend to allow users to upload an image of a sketched webpage design (which may include various design elements outside of your typical containers i.e. nav bar, content container, header, footer, etc.) and then take the data from this image to produce the appropriate HTML and CSS code. I intend to use the Canny Edge Detection method during a stage of the process to weed out any unnecessary design elements so that I am left with simple black and white bounding boxes. I'd then use the data from the simplified image to define separate bounding boxes as HTML div elements. I intend to do all this of course with Python and possibly the SciKit toolset found at http://scikit-image.org/ My question is this: Does the Canny Edge Detector provide the pixel data of the resulting edges? Or will I need to use a separate method to extract the pixel data?

To put it simply: If I use the Edge Detection method and I am left with a black background and 2 white squares as my image, I need to be able to determine the size of each square as well as tell each square apart from the other. Any ideas?

Thanks in advance for any input.

This is very helpful. The code is written in easy to understand form.

ReplyDeleteI hope it also had another version with vectorize operations, rather than loops, that works faster!

Good job !

ReplyDeleteThis is a good start, and a useful resource for me. However, there are some mistakes in your code. First, atan2 returns an angle measure in radians between -pi and pi, so your quantizing of sobeloutdir needs to be updated to take this into account.

ReplyDeleteSecond, the your mag_sup loop, the angle directions you are traversing are 90, 135, 0, then 45, as opposed to the 0, 45, 90, 135 you have written.

Making only these changes to your code, the edge detection on your image produced this one: http://imgur.com/YlOwQSE

hi, I was wondering about the same that you pointed out. will you be able to publish your source code?

Deletethat's awesome. thanks for upload this

ReplyDeleteHello everybody, we are trying to control the Threshold feature using Matlab code for Sobel method. we are not sure how to make the changes in the Threshold situaton - Threshold controller.

ReplyDeleteIf you take the edge's magnitude too high then the Threshold you set does not matters and that way the whole idea of controlling the edge definition is not valid. we can't get a fine results with the edge detection on some tests we've done and thats because of a wrong threshold defintion. there is no other way to solve this problem. you can see part of our code concerning the Thresold controller

hope you can upgrade our idea here and help us with our project.

[~, threshold] = edge(I, 'sobel');

fudgeFactor = handles.fudgeFactor;

BWs = edge(I,'sobel', threshold * fudgeFactor);

figure, imshow(BWs), title('binary gradient mask');

thanks alot! Tamir

hi Tamir, I Think you already have a solution for your problem.

ReplyDeletejust set the function edge to get another varible - lets call it 'fun_thresh', then the function will recognize your image as 'fun_thresh' all the way with your code.

now if u let the func. imshow to work before setting the threshold at any value then you will get shorter running time with your algorithm.

hope it's ok now

Kamat

I am getting a blank image after displaying the image. Here's my code -

ReplyDeletefinal = Image.fromarray(gnh)

final.save("final.png")

final.imshow()

I have the same issue, did you get yours resolved, if yes please let me know

DeleteI got it, it is fromarray(gnl), you got a blank image coz gnh has all zeros in it.

DeleteHi, very nice post.

ReplyDeleteI would like to see your opinion about something I think is related to Image Edge Detection.

Does it make sense to use Canny 1D for Time Series segmentation? I mean, to segment a 1D signal into segments which are separated by big variations.